Welcome to this week’s “brAIn” dump! This week’s “mAIn event” features a fantastic, thought-provoking guest post by Greg Harrison of creative agency MOCEAN, a media/AI voice I deeply respect. Next, it’s the “mosAIc” — a collage of curated AI podcasts & stories. Finally, the “AI Litigation Tracker” — updates on key generative AI-focused IP cases by McKool Smith (check out the full “Tracker” here).

But First …

A shockingly bipartisan group of Senators just re-introduced an expanded “No Fakes Act,” which would protect individual voices and likenesses from the unauthorized creation and use of digital replicas. And here’s the kicker: even OpenAI, Google & other Big Tech players endorse the bill.

I. The mAIn Event - Is AI Plagiarizing Our Minds? (It’s the Elephant in the Room)

Guest Post by Greg Harrison of creative agency MOCEAN (check out his Future of Creativity newsletter)

In my role at MOCEAN, I’ve taken a curious and experimental position to lead AI initiatives — and recommend other creatives do the same (in the spirit of keeping your enemies closer). But deep down, I’ve always had an uneasy feeling, knowing AI can only do what it does because it was trained on the sum total of human creativity.

You don’t have to look far in the comments of some AI videos online to find posts filled with resentment, decrying the displacement of human creatives, and often with a cancel-culture level of anger. While I don’t share that level of anger, I can relate to the source of it — i.e., that something about generative AI feels wrong.

But what exactly is wrong is hard to pin down: it’s not as simple as AI plagiarizing work, as it’s largely true that generative AI outputs are unique, with virtually infinite variations. And while AI can only do what it does because of its training data, the training data is only analyzed for its general qualities and not retained, so it’s not as simple as AI remixing copyrighted files or storing unlicensed material.

And yet, that sinking feeling persists. So I’d like to put forth a possible answer.

While generative AI isn’t trained to plagiarize creative works directly, it’s plagiarizing something far more personal: how our minds work.

Below, I dive into how I arrived at this conclusion by exploring three common defenses of AI, and what each reveals about how AI works, where it’s headed, and the ultimate ambitions of the major AI players.

Defense #1: Scraping The Internet Is “Fair Use”

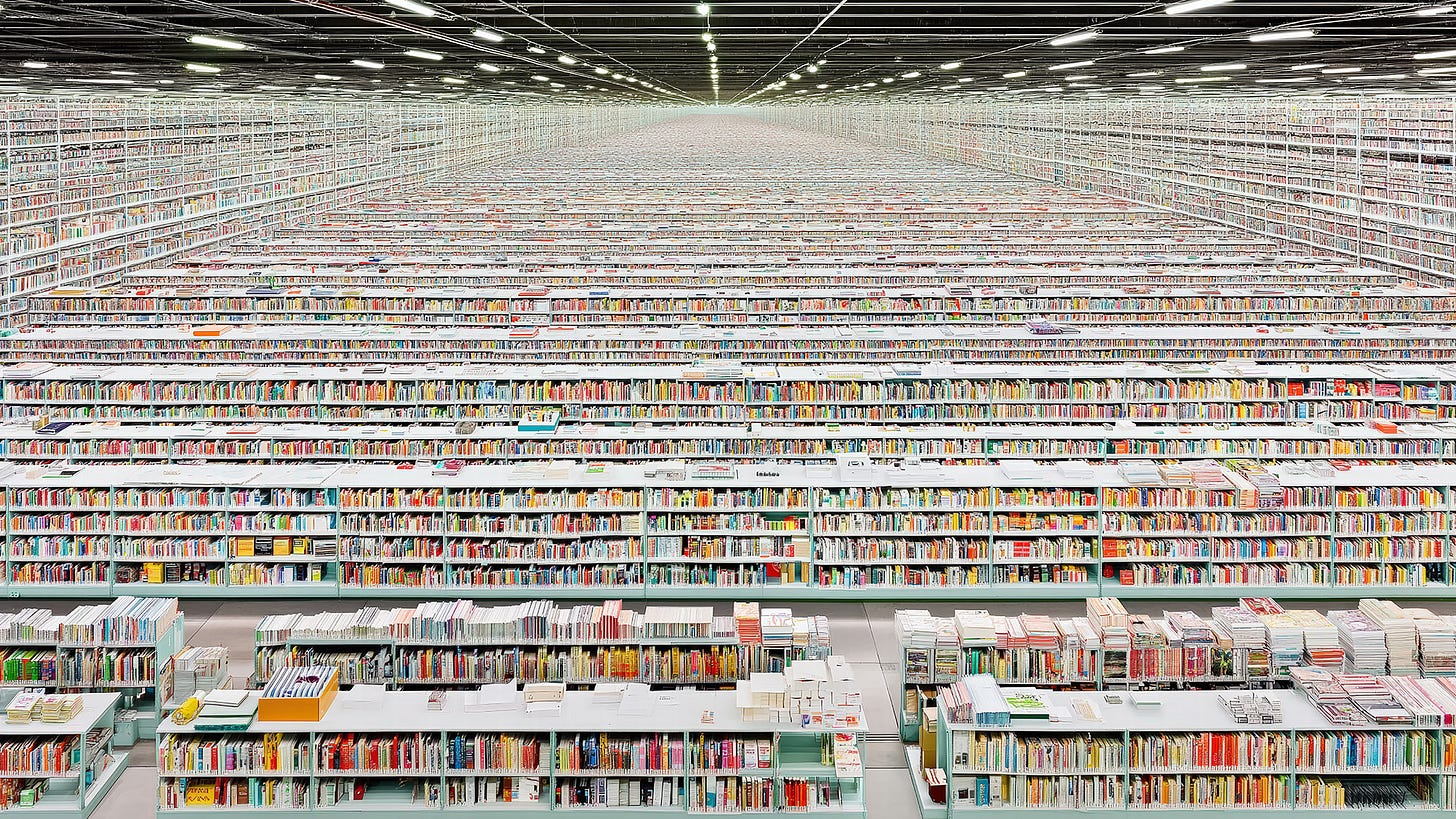

Relying on “fair use” precedent (from the Google Books litigation, in which courts ruled in 2013 and 2015 that Google could digitize books to provide a searchable database of them), AI companies openly admit they analyze copyrighted works to train their neural networks. But this is where the legal sleight of hand comes in. What’s learned from that analysis is not the copyrighted work, but its “latent features”: the patterns, styles, structure, or approach to the work.

This means AI models learn things like sentence structure, visual layout, camera movement, color palette, illustrative and photorealistic styles, chord progressions, song structures, and even concepts like tone, voice, aesthetic balance, and physics.

This is explicitly stated in a publicly available document by the lawyers for Suno, a leading AI text-to-music tool that’s being sued by the major record companies:

“It is no secret that the tens of millions of recordings that Suno’s model was trained on… included recordings whose rights are owned by the Plaintiffs [major record labels]… copies made solely to analyze the sonic and stylistic patterns of the universe of pre-existing musical expression.”

-Suno’s statement from court documents

The use of “solely” tries to downplay the unimaginable scope of analyzing “the universe of pre-existing musical expression,” essentially saying, “Hey, we’re just extracting the soul of every recorded piece of music since the beginning of history to learn how to make new music.” And yet none of this runs afoul of current laws, Suno would say. [Peter’s editorial note: the legality of what Suno is doing is now being litigated in the courts.]

So current models analyze copyrighted material for free, learning from the best of human creativity, and are then placed behind the paywalls of for-profit tools that enter the same marketplace of creativity from which their training data came. That sounds anything but fair.

Defense #2: AI Outputs Are Unique, So Not Plagiarism

Generative AI models blend a host of influences from their training data to create outputs that are, by design, generally not exact reproductions of any singular source. This process means that while an AI may have been exposed to extensive libraries of text, images, or videos during its training, it does not store and retrieve these works verbatim. Instead, it combines patterns, structures, and stylistic elements in ways that are inherently variable.

Even if certain distinctive features from the training data appear in the output, they are within a broader, novel context that lacks the coherence of a sourced work. This distinction is crucial because the law typically protects the specific expression of ideas rather than the ideas themselves, and in the case of AI, the output is an amalgamation of influences rather than a clear, identifiable copy.

Put another way, perhaps AI is not yet seen as a kind of plagiarism due to the sheer complexity of this particular kind of theft, to which our society’s legal and creative institutions have not yet caught up. It’s like a large-scale data laundering scheme, hidden within the black box of neural networks. [Peter’s editorial note: I addressed this idea/expression copyright dichotomy in last week’s newsletter, where I pointed out that famed anime director Hayao Miyazaki’s “style” can, indeed, be infringed by OpenAI’s image generator. I also pointed out that duplication of specific works isn’t necessary to find infringement.]

Defense #3: AI Learns Like A Person

One of the popular arguments for scraping the internet and training on copyrighted material is that it’s fundamentally the same as how humans learn. Just as people study books, watch films, or examine art to absorb ideas and become inspired to create their own work, AI “learns” from those same sources to generate new content. If we make it illegal for an AI system to learn from art, writing and music, the argument goes, will we next outlaw the film school auteur’s Kubrick references in his work?

But there’s a vast difference between what a single person can do and what a billion-dollar “AI-as-person” can do. In the case of generative AI, its learning and creative output are on an industrial scale and speed never before seen in history, and well beyond the ability of any one or even a group of human creatives. [Peter’s editorial note: check out my earlier post, “No, AI Copying Is Not the Same as Human ‘Copying’”.]

But this massive scale is key to every AI company’s ambitions, because of what they’ve discovered — i.e., when you train a neural network on billions of words, images, videos, or songs, these models start to learn something far beyond how any single creative asset is made. In a fireside chat, Ilya Sutskever, a co-founder of OpenAI (the company behind ChatGPT), spoke with NVIDIA CEO Jensen Huang about this very subject:

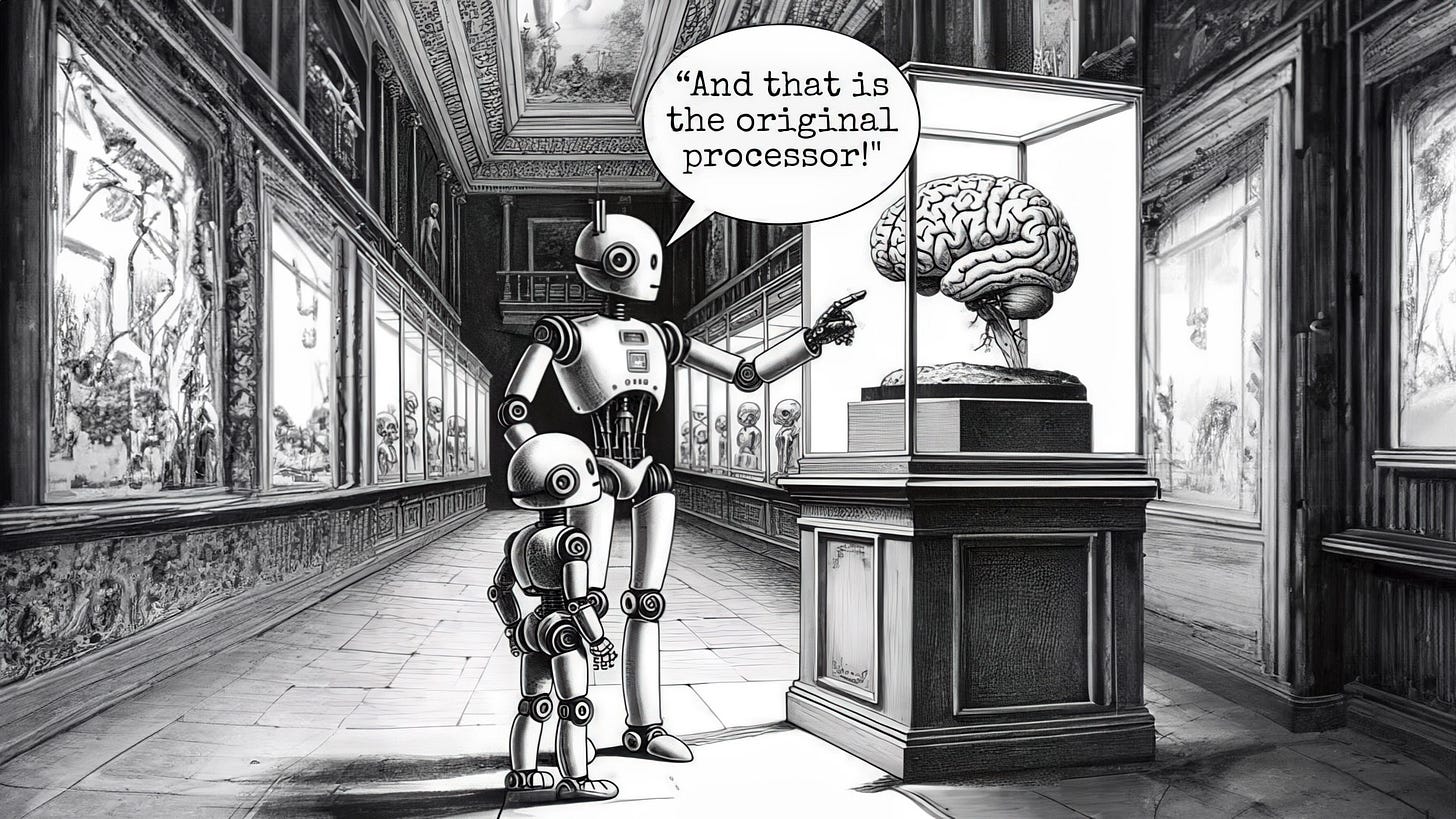

“When we train a neural network to predict the next word from a large body of text, it learns some representation of the process that produced the text.”

-Ilya Sutskever, OpenAI Co-Founder

And what is “the process that produced the text”? A euphemistic reference to the human mind. Using the legal precedent of “fair use,” AI companies like OpenAI are reverse engineering how our minds work by analyzing everything we’ve created.

For further evidence, here’s the expanded interview clip (click on the link below), where Sutskever explains what large language models actually learn by analyzing the statistical correlations in text (spoiler – it doesn’t just learn how to write):

Excerpt from fireside chat with Ilya Sutskever & Jensen Huang

Click here to watch full interview

The ultimate goal, as Sutskever says in the video, is to build World Models — i.e., with enough data, analysis and scale, AI models aim to effectively simulate the physics as well as the written and visual languages of the world, including the objects, animals, and people that live within it. The developers of Sora, the text-to-video model from OpenAI, the company behind ChatGPT, state this explicitly in their public research:

“Our results suggest that scaling video generation models is a promising path towards building general purpose simulators of the physical world.”

-Sora Developers

Think Midjourney is only trying to be the leading text-to-image generator on the market? This quote from their CEO states their bigger ambition: a World Model.

“We’re really trying to get to world simulation. People will make video games in it, shoot movies in it, but the goal is to build an open world sandbox.”

-David Holz, Midjourney Founder & CEO

To put a fine point on it, Elon Musk posted the following cartoon to his account on X after an upgrade to his AI offering called Grok:

And this much-maligned Apple “Crush!” ad (click on the link), even though it wasn’t specifically about AI, turns out to be a perfect metaphor for the World Model ambitions of AI companies — crushing all the objects of human creativity into a wafer-thin iPad.

So What Do We Do?

For those who remember the early days of digital music, you could compare this moment in AI to when Napster indiscriminately digitized and distributed songs, throwing the art and business of music into chaos. There’s no doubt Napster was an inflection point that – whether people fought or embraced it – radically transformed the music industry. But Napster also forced a legal and ethical reckoning around compensation for human creativity, one that I think we all feel is critical for AI to move forward in an equitable way.

There are close to 40 lawsuits working their way through the courts that challenge the legality of training on copyrighted material, with some aiming to show how what AI is doing is well outside the intention of the “fair use” precedent. Unfortunately, it could take years for these cases to get resolved, allowing current practices to continue to fuel AI’s explosive growth.

I’ve wondered if public sentiment will also play a role, as people wake up to how AI works and what impact it will have on creative industries. Will it be like what happened in the fashion industry when public awareness about the cruelty of animal fur in clothing sparked a backlash that changed industry practices?

While there are a lot of unknowns, one thing is clear: however sobering the facts of AI may feel, it’s critical for the creative community to engage with AI, understand it, and work together to have an informed voice in the conversation. AI is not going away, and if we confront the benefits and threats of it with open eyes, we won’t either.

What do you think? Send your feedback to Peter at peter@creativemedia.biz.

II. The mosAIc — Peter’s Curated Podcasts, Etc.

(1) Can Creativity & AI Co-Exist?

Click on the video “play” button above to watch/listen to my interview with host Michael Ashley on the “AI Philosopher” podcast. We focused on AI and music, but we hit all the overall AI/media/entertainment notes too. I think you’ll enjoy it.

(2) Listen to “the brAIn” Podcast Episode, “OpenAI & Studio Ghibli: Yes, ‘Style’ Can Be Infringed”

It’s an enlightening discussion based on last week’s feature article.

(3) Season 4 of My “The Story Behind the Song” Music Podcast Kicks Off TODAY! (my interview with Mike Campbell of Tom Petty & The Heartbreakers)

Today’s episode (season 4, episode 1) features my interview with Mike Campbell, legendary guitarist of Tom Petty & The Heartbreakers, discussing the story behind the band’s breakout track “Refugee.” Other upcoming Season 4 guests include Mark Mothersbaugh of Devo, Susanna Hoffs of The Bangles, Nancy Wilson of Heart, Adam Duritz of Counting Crows, and Bud Gaugh of legendary SoCal punk/ska/reggae band Sublime. What does this have to do with AI? Nothing, really. It’s just my human creative antidote and soul-cleansing balm for these ever-accelerating tech-tonic times.

You can follow my show on Apple Podcasts, Spotify, and all other podcast networks. You can also check out the official “The Story Behind the Song” website via this link.

(4) ICYMI - Critical Topics/Important Recent Articles

(i) “The MCP Revolution: Why This Boring Protocol May Change Everything About AI,” by expert Shelly Palmer. The topic may sound dull, but sometimes dull is essential: Shelly tells us that MCP is the next great topic after “agentic AI.”

(ii) “Researchers Suggest That OpenAI Trained AI Models on Paywalled O’Reilly Books,” via TechCrunch. Yes, mass scraping can, and does, reach paid, subscriber-only content.

Want to work with Peter? Check this out …

III. AI Litigation Tracker: Updates on Key Generative AI/Media Cases (by McKool Smith)

Partner Avery Williams and the team at McKool Smith (named “Plaintiff IP Firm of the Year” by The National Law Journal) lay out the facts of — and latest critical developments in — the key generative AI/media litigation cases listed below. All those detailed updates can be accessed via this link to the “AI Litigation Tracker”.

The Featured Updates:

(1) The New York Times v. Microsoft & OpenAI

(2) Kadrey v. Meta

(3) Thomson Reuters v. Ross Intelligence

(4) In re OpenAI Litigation (class action)

(5) Dow Jones, et al. v. Perplexity AI

(6) UMG Recordings v. Suno

(7) UMG Recordings v. Uncharted Labs (d/b/a Udio)

(8) Getty Images v. Stability AI and Midjourney

(9) Universal Music Group, et al. v. Anthropic

(10) Sarah Anderson v. Stability AI

(11) Raw Story Media v. OpenAI

(12) The Center for Investigative Reporting v. OpenAI

(13) Authors Guild et al. v. OpenAI

NOTE: Go to the “AI Litigation Tracker” tab at the top of “the brAIn” website for the full discussions and analyses of these and other key generative AI/media litigations. And reach out to me, Peter Csathy (peter@creativemedia.biz), if you would like to be connected to McKool Smith to discuss these and other legal and litigation issues. I’ll make the introduction.

About Peter’s Firm Creative Media

We represent media companies for generative AI content licensing, with deep relationships and market access, insights and intelligence second to none. We also specialize in market-defining strategy, breakthrough business development and M&A, and cost-effective legal services for the worlds of media, entertainment, AI and tech. Reach out to Peter at peter@creativemedia.biz to explore working with us.

Send your feedback to me and my newsletter via peter@creativemedia.biz.