GenAI & Copyright: Analysis of 10K+ Submissions on "Fair Use" (Key Themes, Sentiment)

EXCLUSIVE: Using AI to Analyze the 10,000+ Submissions Received by the Copyright Office on the Central Issues of Copyrightability & "Fair Use"

Welcome to my “brAIn” on this MLK Day. First, this week’s “mAIn event” — an important exclusive “MUST READ” analysis by NYU professor/expert Howie Singer and NYU Stern School of Business colleagues. Then, the “mosAIc” — a collage of key AI/media stories from last week. Finally, the “AI Litigation Tracker” — critical updates on the key GenAI/media cases by leading law firm McKool Smith (you can also check out the “Tracker” here via this link).

But First …

Last Tuesday, all eyes were on federal Judge Sidney Stein (Southern District of New York), who heard oral arguments on OpenAI’s and Microsoft’s “motion to dismiss” The New York Times copyright infringement case, perhaps the single most important of the 40+ ongoing lawsuits (you can track its developments in the “AI Litigation Tracker”). Judge Stein made no decision from the bench, but a ruling should come soon. My prediction? The Judge will reject defendants’ “motion to dismiss,” and The Times case will move forward. Stay tuned.

I. The mAIn event: Analysis of 10K+ Submissions to the Copyright Office on the Key Issues of Copyright & “Fair Use” (Guest Post)

GUEST POST by Howie Singer, João Sedoc, and Foster Provost of NYU Stern (full bios below)

Background. Last week, Howie Singer reached out to share a detailed analysis, by him and his NYU colleagues, that used AI to examine every word of the 10,000+ submissions the U.S. Copyright Office received when it requested comment on the copyright and “fair use” issues surrounding generative AI’s use of creative works. Given the importance of their analysis, and the fascinating exclusive data they compiled, I asked Howie if I could post his summary in its entirety. He graciously said “yes.” So here it is, featured in full because it’s an important piece.

Introduction

If you asked any writer, artist, musician, or technologist to name the most significant technology of the past year, you’d almost certainly get the same answer: Generative Artificial Intelligence (GenAI). But when it comes to the copyright issues arising from GenAI, there is anything but unanimity. The U.S. Copyright Office (USCO) received over 10,000 submissions when it requested comments on these issues in August 2023.

The commenters range from AI start-ups to the largest tech companies in the world, and from well-known creators of television series and films to companies holding the rights to millions of copyrighted songs and recordings. There are also hundreds of anonymous contributors. Reading all those submissions would require an enormous amount of time and effort; identifying the common themes across thousands of pages is an even greater challenge. The Copyright Office has spent months doing exactly that and is preparing to publish its understanding of the arguments put forward.

As we understand it, the Copyright Office relied on human analysis. But there is an alternative: we could leverage the very technology that provoked those thousands of opinions.

Our Proprietary AI-Augmented Approach

We have developed a “Copyright Opinion Research Assistant” (CORA), an AI-based thematic analyzer built on OpenAI’s GPT models. It “reads” every page of every submission and summarizes the key themes. For each document, CORA identifies specific quotes that support each theme it attributes to that document, and it quantifies the prevalence of the different themes across all the comments. The project also serves as a case study in applying Large Language Models to this type of problem, and it demonstrates the crucial role that people play in getting the answers right.
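CORA’s code is not published here, so the following is only a minimal sketch of why attaching a quote to every attributed theme is useful: a quote can be checked mechanically against the source text, which flags any quote a model may have invented. The `ThemeAttribution` record and the `quote_is_verbatim` helper are hypothetical names, and the whitespace/case normalization is an assumption about how PDF-extracted text might be compared.

```python
from dataclasses import dataclass
import re


@dataclass(frozen=True)
class ThemeAttribution:
    """One theme attributed to one submission, with its supporting quote (hypothetical schema)."""
    doc_id: str
    theme: str
    quote: str


def _normalize(text: str) -> str:
    # Collapse whitespace runs and ignore case so line breaks from
    # PDF extraction do not cause false mismatches.
    return re.sub(r"\s+", " ", text).strip().lower()


def quote_is_verbatim(attribution: ThemeAttribution, documents: dict[str, str]) -> bool:
    """Return True only if the quoted text actually appears in the cited submission."""
    source = documents.get(attribution.doc_id)
    if source is None:
        return False  # the cited document does not exist
    return _normalize(attribution.quote) in _normalize(source)


if __name__ == "__main__":
    docs = {"c-001": "Artists should be asked\nbefore their work is used for training."}
    genuine = ThemeAttribution("c-001", "consent", "asked before their work is used")
    invented = ThemeAttribution("c-001", "consent", "AI art is theft")
    print(quote_is_verbatim(genuine, docs))   # True
    print(quote_is_verbatim(invented, docs))  # False
```

A substring check like this only confirms that a quote exists; whether the quote really supports the theme still needs a human reader.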

6 Overarching Themes Related to the 10K+ Submissions

CORA identified the following six overarching themes (we combined some lower-level themes and rephrased them, which took about 30 minutes of our time):

THEME 1:

AI developers should obtain explicit consent from creators before using their content for training. This requires a simple opt-in/opt-out mechanism for creators as well as transparency about the sources of training material to ensure ethical uses and to prevent copyright infringement.

THEME 2:

The value of human creativity and artistry needs to be protected from the impacts of AI. AI should be regarded as a tool used by people and should not be credited with authorship. This requires mandatory identification of AI-generated outputs, adaptation of copyright law (both within the US and internationally) to current and evolving technological realities, and improved regulatory frameworks and enforcement mechanisms. Finally, creators are likely to need emotional and professional support when their works are used without their agreement or without recognition of their contributions.

THEME 3:

Fair compensation models are essential to ensure creators receive rightful royalties and remuneration for the use of their works in AI datasets and outputs. This requires mechanisms to prevent unregulated monetization and the economic disruption and displacement of artists.

THEME 4:

Maintain academic and educational integrity by deterring plagiarism and sustaining genuine learning.

THEME 5:

Preserve cultural and artistic heritage against the diluting effects of AI content.

THEME 6:

Implement ethical AI practices to prevent societal harms caused by AI-generated false or manipulated content.

The corpus of comments was (way) too large to fit into the input of even the most capable Large Language Models (LLMs), and feeding it in all at once probably would not have been effective anyway, given current LLM capabilities. Instead, CORA applied the LLM to batches of comments sequentially, refining the key points as it processed each batch. We know from experience that LLM-based systems can produce different results when run multiple times, even when prompted exactly the same way, so we had CORA perform the same task several times to obtain a broader set of key themes to consider. In addition, CORA identified, for each document, a specific quote supporting each theme it attributed to that document, which made it easier to validate the accuracy of the results.

That process produced the first six themes listed above, which have a decidedly pro-creator point of view.
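The batch-by-batch process described above can be sketched as a fold over the corpus: split the comments into batches small enough for the model, pass each batch together with the running theme list to the model, and keep the updated list; then repeat the whole pass and pool the runs. This is a minimal sketch, not CORA’s code. `refine_themes` is a hypothetical stand-in for a prompt to a GPT model (no real API call is shown), the character budget is an assumed proxy for a token limit, and counting how many runs produced each theme is one plausible pooling rule, since the post does not say how the runs were combined.

```python
from collections import Counter
from typing import Callable, Iterator

# Hypothetical model call: (current theme list, batch of comments) -> updated theme list.
RefineFn = Callable[[list[str], list[str]], list[str]]


def batches(comments: list[str], max_chars: int) -> Iterator[list[str]]:
    """Yield consecutive groups of comments whose combined length stays under max_chars.

    A single comment longer than max_chars is yielded on its own.
    """
    batch: list[str] = []
    size = 0
    for comment in comments:
        if batch and size + len(comment) > max_chars:
            yield batch
            batch, size = [], 0
        batch.append(comment)
        size += len(comment)
    if batch:
        yield batch


def one_pass(comments: list[str], refine_themes: RefineFn, max_chars: int) -> list[str]:
    """Process batches in order, refining the running theme list after each one."""
    themes: list[str] = []
    for batch in batches(comments, max_chars):
        themes = refine_themes(themes, batch)
    return themes


def pooled_themes(comments: list[str], refine_themes: RefineFn,
                  runs: int = 3, max_chars: int = 50_000) -> Counter:
    """Repeat the pass several times and count how many runs produced each theme."""
    counts: Counter = Counter()
    for _ in range(runs):
        counts.update(set(one_pass(comments, refine_themes, max_chars)))
    return counts


if __name__ == "__main__":
    def toy_refine(themes: list[str], batch: list[str]) -> list[str]:
        # Keyword stand-in for the model so the sketch runs offline.
        found = {k for k in ("consent", "compensation") if any(k in c for c in batch)}
        return sorted(set(themes) | found)

    comments = ["We need consent first.", "Pay fair compensation.", "Training is fair use."]
    print(pooled_themes(comments, toy_refine, runs=3, max_chars=30))
```

With a real model, differently worded versions of the same theme would not match as exact strings; the authors note that they combined and rephrased lower-level themes themselves.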

2 Additional Themes (Focused on Tech-Centric Views)

These six themes are indeed an accurate characterization of a significant majority of the ten thousand submissions, but they fail to portray the “other side of the story,” which is represented in the smaller number of comments submitted by companies developing AI models and by their investors. To add this alternative perspective, we ran CORA again using only the submissions from these entities. This produced the two additional themes below, and a more balanced picture overall.

THEME 7:

Fair use provisions must be maintained to enable the continued development of AI technologies. Training models on copyrighted material is a transformative use.

THEME 8:

We need to ensure that AI will be allowed to drive significant economic growth and productivity gains across the economy.
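Re-running CORA on the AI developers’ and investors’ comments only requires filtering the corpus before applying the unchanged analysis, so the two theme sets differ only in their input. A minimal sketch, assuming each submission carries a submitter-category label; the `Submission` type, the category names, and the `analyze` callable are hypothetical, since the post does not say how these submitters were identified.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Submission:
    doc_id: str
    submitter_category: str  # hypothetical label, e.g. "ai_developer", "investor", "individual"
    text: str


def rerun_on_subset(submissions: Iterable[Submission],
                    categories: set[str],
                    analyze: Callable[[list[str]], list[str]]) -> list[str]:
    """Run the unchanged thematic analysis on submissions from the given categories only."""
    subset = [s.text for s in submissions if s.submitter_category in categories]
    if not subset:
        return []  # nothing to analyze; avoid calling the model on empty input
    return analyze(subset)


if __name__ == "__main__":
    corpus = [
        Submission("c-001", "individual", "Creators must be paid."),
        Submission("c-002", "ai_developer", "Training is transformative fair use."),
    ]
    # Identity "analyzer" so the example runs without a model: it returns the texts it was given.
    print(rerun_on_subset(corpus, {"ai_developer", "investor"}, analyze=lambda texts: texts))
    # prints ['Training is transformative fair use.']
```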

More Data Analysis of the Specific Comments

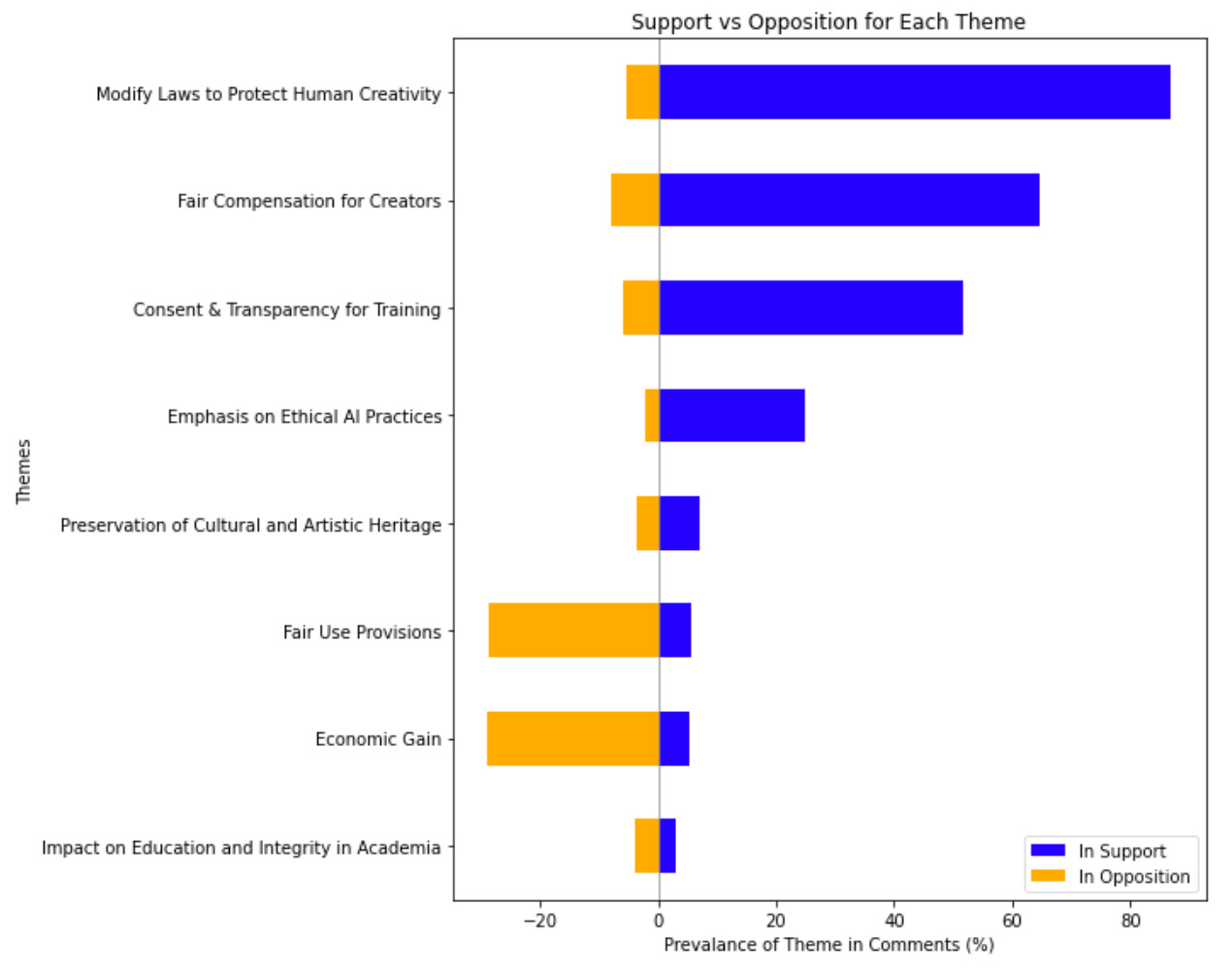

Next, we had CORA count the number of comments that supported and opposed each of the eight themes (Figure 1). The counts help explain why CORA initially omitted Themes 7 and 8 despite their obvious importance: we believe that prompting CORA to find the main themes led it to emphasize the large number of submissions with a pro-creator bent.

Figure 1: The percentage of the overall comments that were in support (blue) and in opposition (orange) to each theme. The bars are sorted by the in-support percentages.
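Figure 1’s bars can be computed from per-document stance labels. A minimal sketch, assuming each (document, theme) pair is labeled "support" or "oppose" and left out when the comment does not address the theme; percentages are taken over all comments, as in the figure. The label format and the function name are hypothetical, and the descending sort order is an assumption (the caption only says the bars are sorted by in-support percentage).

```python
from collections import Counter


def stance_percentages(labels: dict[tuple[str, str], str],
                       total_comments: int) -> list[tuple[str, float, float]]:
    """Return (theme, % in support, % in opposition) computed over all comments.

    `labels` maps (doc_id, theme) to "support" or "oppose"; comments that do not
    address a theme simply have no entry for it.
    """
    if total_comments <= 0:
        raise ValueError("total_comments must be positive")
    support: Counter = Counter()
    oppose: Counter = Counter()
    for (_doc_id, theme), stance in labels.items():
        if stance == "support":
            support[theme] += 1
        elif stance == "oppose":
            oppose[theme] += 1
    rows = [
        (theme, 100 * support[theme] / total_comments, 100 * oppose[theme] / total_comments)
        for theme in set(support) | set(oppose)
    ]
    # Highest in-support share first (assumed direction); ties broken by theme name.
    return sorted(rows, key=lambda row: (-row[1], row[0]))


if __name__ == "__main__":
    labels = {("c1", "T1"): "support", ("c2", "T1"): "support",
              ("c3", "T7"): "support", ("c1", "T7"): "oppose"}
    print(stance_percentages(labels, total_comments=4))
    # prints [('T1', 50.0, 0.0), ('T7', 25.0, 25.0)]
```

Because a comment can address several themes or none, a theme’s support and opposition shares need not add up to 100%.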

As users of AI systems, we do need to keep in mind certain challenges:

Can we be certain that the set of themes CORA provided was indeed an accurate thematic portrayal of the many thousands of submissions?

Could some of the themes be “fabrications” that were not actually expressed in the comments?

Even if every theme is well supported by specific references in the documents, are other common themes present in the comments but missing from CORA’s results?

Human “Quality Control” of the AI Analysis (& 1 More “Missing” Theme of Soul)

In principle, we could have spent months reading through all the comments to determine whether the results exhibited these errors. However, that would defeat CORA’s main goal: to help avoid that work. Error analyses are normally statistical anyway, so we first randomly sampled 100 submissions and had a human expert in art, writing, and technology confirm, for each submission, that CORA accurately reflected each attributed theme, that it cited actual quotes, and that it had not missed any important themes in that document.
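The audit step amounts to drawing a simple random sample without replacement and recording the expert’s three judgments for each sampled submission. This is a minimal sketch; the fixed seed (for reproducibility), the `ReviewResult` fields, and the summary rates are assumptions, not details from the study.

```python
import random
from dataclasses import dataclass


def sample_for_review(doc_ids: list[str], n: int = 100, seed: int = 0) -> list[str]:
    """Draw n distinct submissions uniformly at random; the seed makes the draw repeatable."""
    rng = random.Random(seed)
    return rng.sample(doc_ids, k=min(n, len(doc_ids)))


@dataclass
class ReviewResult:
    doc_id: str
    themes_accurate: bool     # each attributed theme reflects the submission
    quotes_genuine: bool      # every cited quote appears in the submission
    missed_themes: list[str]  # important themes the reviewer found but CORA did not


def summarize(results: list[ReviewResult]) -> dict[str, float]:
    """Share of reviewed submissions passing each check, plus the share with a missed theme."""
    n = len(results)
    if n == 0:
        raise ValueError("no reviews to summarize")
    return {
        "themes_accurate": sum(r.themes_accurate for r in results) / n,
        "quotes_genuine": sum(r.quotes_genuine for r in results) / n,
        "any_theme_missed": sum(bool(r.missed_themes) for r in results) / n,
    }
```

Sampling without replacement keeps the reviewed submissions distinct, and the resulting rates estimate the corresponding rates for the whole corpus; free-text `missed_themes` entries that recur across many reviews point to a theme the model overlooked.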

Our expert reported: (1) Every attributed quote was correct. (2) The themes attributed to the comments were by and large correct. (3) CORA had missed the following very common theme, which the human analyst easily identified:

THEME 9:

Many comments expressed deeply held feelings and “soul.”

The complete set of themes CORA identified is consistent with the numerous published analyses of selected comments, which supports the validity of our far more comprehensive approach. To our knowledge, no prior examination of the submissions has covered all the themes we identified. Moreover, with this additional analysis, we can state with some confidence that these are indeed the most common themes. We are confident in that assertion because, unlike these other analyses:

CORA actually analyzed every submitted document, attributed themes to each, and quantified the prevalence of themes across all the documents;

We evaluated the accuracy of that work; and

We assessed whether any common themes were missing.

Post-Analysis Conclusions

We will undoubtedly see increasing use of large language models to distill the key points from large collections of documents; it is simply more efficient than having people complete the task. In this case, it would have taken several person-months to read and analyze all the comments; with CORA, the overall process took 10–15 person-hours (plus about 30 hours of computer time, costing less than $700). We hope that those interested in the Copyright Office process will find the thematic analysis of the comments helpful.

Though there is certainly room for improvement, this undertaking demonstrated the need to integrate human insight to ensure the quality of the results. Without it, we would have missed the views of the AI companies and that final theme, and we would not have had high confidence in the overall accuracy of the thematic analysis.

After completing this work, we believe that relying solely on AI for this type of analytical task is unwise. But when appropriately complemented by people, it can be a game changer for distilling massive collections of documents efficiently and effectively.

Authors’ Acknowledgements: Thank you to Ira Rennert and the NYU Fubon Center for supporting work on demystifying AI. Thanks to Caterina Provost-Smith for domain-expert advice and assistance. Thanks to Hanna Provost for editorial assistance.

BIOS: Howie Singer is an Adjunct Professor in the NYU Music Business program and co-author of “Key Changes: The 10 Times Technology Transformed the Music Industry”; João Sedoc is an Assistant Professor of Technology, Operations and Statistics at the Stern School of Business at NYU, whose research lies at the intersection of machine learning and natural language processing; Foster Provost is Ira Rennert Professor of Entrepreneurship and Information Systems and Director, Fubon Center, Data Analytics & AI, at the Stern School of Business at NYU.

II. The mosAIc — 6 Key Stories from Last Week

(1) Is It the Year of the Full-Length AI Movie?

Read this article about a Hollywood director’s splashy debut using Google’s new AI video model.

(2) New Adidas Video Ad Made 100% by GenAI

Watch this new Adidas ad campaign called “Floral” that is billed as being made entirely by using generative AI — no marketing agency needed. A reality check and sign of things to come.

(3) Hollywood & the Music Industry Take Different Approaches to AI — But Why?

Read this Forbes article that lays it out.

(4) Rampant Scraping of Content (& Breaking a Business in the Process)

Read this real-world cautionary tale, brought to you by TechCrunch, about how OpenAI’s bots “crushed” a major website that spent over a decade assembling what it calls the largest database of “human digital doubles” on the web (meaning 3D image files scanned from actual human models).

(5) “Agentic AI” Is Everywhere

One key “must know” theme (and term) emerging in AI discussions everywhere is “agentic AI” (AI “agents”). What is it? Read this summary from Axios, and here’s another very helpful overview of what it is and what it isn’t. This new reality is coming soon to all industries, including media and entertainment. In fact, it is already here: read how OpenAI is adding agentic AI tasks to ChatGPT via Mashable.

(6) And Even More Predictions About How AI Will Change Business (& Life Itself) in 2025

This article from Fast Company is worth reading.

Follow me on Bluesky via this link.

You can also continue to follow my longer daily posts on LinkedIn via this link.

III. AI Litigation Tracker: Updates on Key Generative AI/Media Cases (by McKool Smith)

Partner Avery Williams and the team at McKool Smith (named “Plaintiff IP Firm of the Year” by The National Law Journal) lay out the facts of — and latest critical developments in — the key generative AI/media litigation cases listed below via this link to the “AI Litigation Tracker”.

(1) Raw Story Media v. OpenAI (about which I wrote at length a couple weeks back via this link)

(2) The Center for Investigative Reporting v. OpenAI

(3) Dow Jones, et al. v. Perplexity AI (about which I wrote at length a couple weeks back via this link)

(4) The New York Times v. Microsoft & OpenAI

(5) Sarah Silverman v. OpenAI (class action)

(6) Sarah Silverman, et al. v. Meta (class action)

(7) UMG Recordings v. Suno

(8) UMG Recordings v. Uncharted Labs (d/b/a Udio)

(9) Getty Images v. Stability AI and Midjourney

(10) Universal Music Group, et al. v. Anthropic

(11) Sarah Andersen v. Stability AI

(12) Authors Guild et al. v. OpenAI

NOTE: Go to the “AI Litigation Tracker” tab at the top of “the brAIn” website for the full discussions and analyses of these and other key generative AI/media litigations. And reach out to me, Peter Csathy (peter@creativemedia.biz), if you would like to be connected to McKool Smith to discuss these and other legal and litigation issues. I’ll make the introduction.

Check out my firm Creative Media and our AI-focused services, which include representation of media companies for generative AI licensing.

Send your feedback to me and my newsletter via peter@creativemedia.biz.